AI is everywhere now: in the apps you use, the ads you see (or see too often), the recommendations you may or may not follow, and the tools that help you work better.

The speed of innovation has created a second problem: a towering pile of jargon. This Ultimate AI Glossary explains the most important AI terminology in layman’s terms, ties each term to real-life examples you can actually picture, and links ideas together so you understand not only what something is but why it matters.

This is not a dictionary of AI terminology. It is a glossary that describes the concepts in plain language, relates them to real-world examples, and dispels misconceptions, so that you get a clear picture of current AI trends.

Whether you’re exploring machine learning terminology, trying to decode natural language processing (NLP) concepts, or learning the difference between deep learning and traditional AI, this glossary will help you.

Why You Need an Ultimate AI Glossary in 2025

AI has crept into everything from healthcare and education to marketing, entertainment, and even law enforcement. Even the engineers who build AI worry that it may one day replace software engineers.

Whether you are a student, a business owner, or just curious, knowing these terms will help you:

- Make smarter career choices

- Understand tech news and trends

- Use AI tools more effectively

- Avoid being misled by hype

In other words, AI literacy is no longer optional.

Note: Small business owners, SEO specialists, and digital marketers have started applying ChatGPT SEO to their websites so that they rank in AI search.

Core Concepts: The Foundation

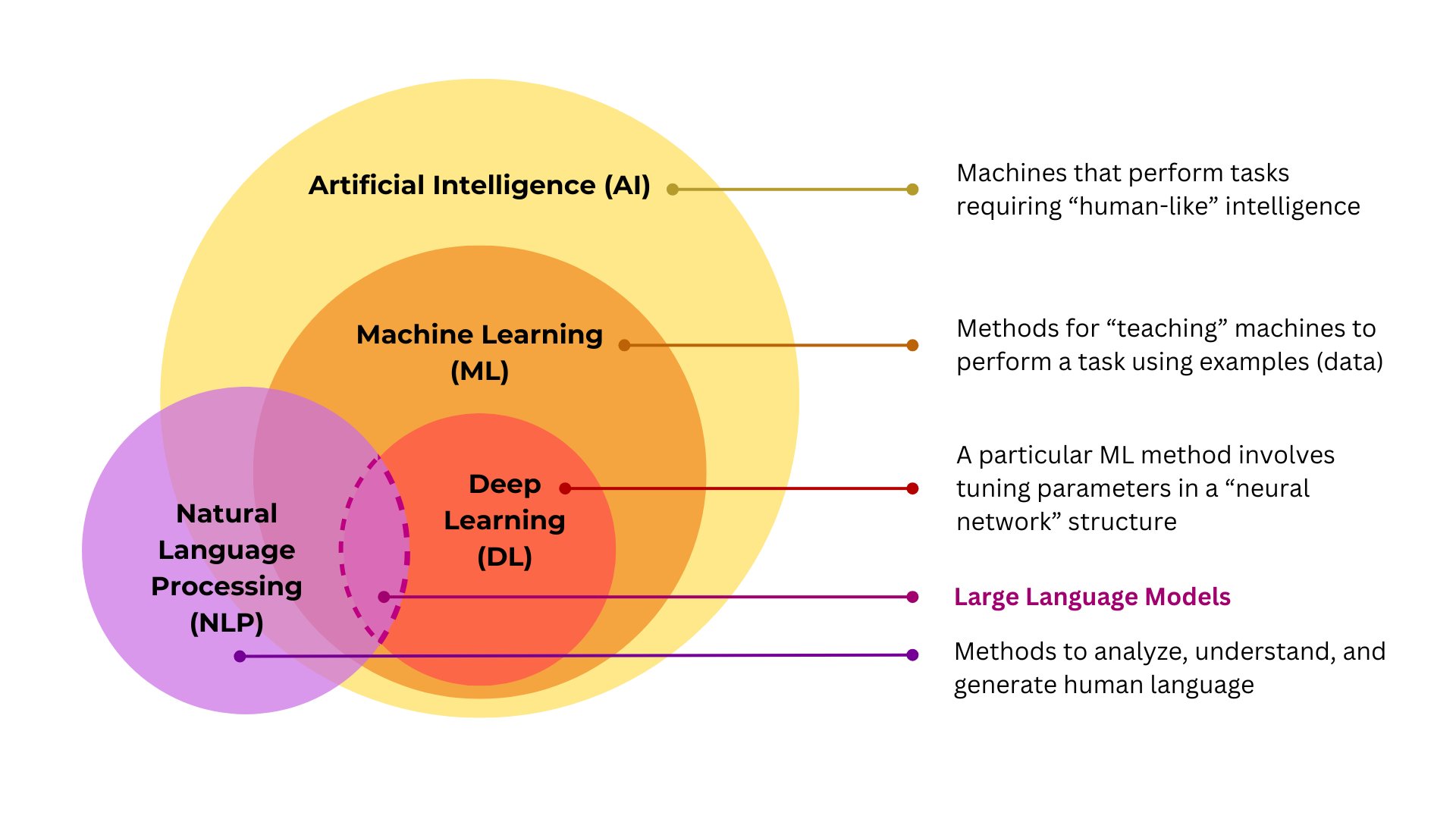

- Artificial Intelligence (AI): Systems that perform tasks that once required human intelligence, such as speech recognition, image processing, pattern recognition, and response selection. Think of AI as the umbrella term.

- Machine Learning (ML): A kind of AI where systems learn from examples (data). Instead of hand-coding rules (“if X then Y”), ML uncovers patterns in data and applies those patterns to make predictions.

- Deep Learning: A subset of ML that uses layered neural networks to model complex patterns (images, raw audio, long text). The recent breakthroughs in image generation and large language models (such as China’s DeepSeek) were powered by deep learning.

- Why this matters: AI = the goal. ML = how many teams reach the goal. Deep learning = the current best approach for highly complex, high-volume tasks.
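
To make the contrast between hand-coded rules and learned patterns concrete, here is a minimal Python sketch. The temperature readings, the labels, and both function names are invented for illustration; real ML systems learn far richer patterns than a single threshold.

```python
# Hand-coded rule: a person chooses the cut-off ("if X then Y").
def rule_based_is_fever(temp_c):
    return temp_c >= 38.0


# Learned rule: choose the cut-off that classifies the labeled examples best.
def learn_threshold(examples):
    candidates = sorted(temp for temp, _ in examples)
    best_threshold, best_correct = candidates[0], -1
    for threshold in candidates:
        # Count how many examples this threshold would label correctly.
        correct = sum((temp >= threshold) == label for temp, label in examples)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


# Hypothetical labeled data: (temperature in Celsius, has_fever)
examples = [
    (36.5, False), (37.0, False), (37.4, False),
    (38.1, True), (38.6, True), (39.2, True),
]
learned = learn_threshold(examples)
print(learned)
```

In the first function a human supplies the number; in the second, the number comes from the data, which is the core idea behind ML.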

The Machine-Learning Family: How Models Learn

Supervised learning

- What: Train on labeled data (input → correct output).

- Example: Train on thousands of X-ray images labeled “fracture” or “no fracture” so the model learns to predict new cases.

- When to use: Classification and regression problems where labels exist.
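
As a rough illustration of learning from labeled examples, the sketch below uses a 1-nearest-neighbor classifier, one of the simplest supervised methods. The two numeric features and their values are hypothetical stand-ins for whatever a real imaging pipeline would extract from an X-ray.

```python
import math


def predict_1nn(train, query):
    """Return the label of the training example closest to `query`."""
    _, nearest_label = min(train, key=lambda pair: math.dist(pair[0], query))
    return nearest_label


# Hypothetical features per image: (bone_density_score, edge_irregularity_score)
train = [
    ((0.9, 0.1), "no fracture"),
    ((0.8, 0.2), "no fracture"),
    ((0.4, 0.8), "fracture"),
    ((0.3, 0.9), "fracture"),
]

print(predict_1nn(train, (0.35, 0.85)))
print(predict_1nn(train, (0.85, 0.15)))
```

The labels do the teaching here: the model never sees a rule for what a fracture looks like, only examples that are already tagged.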

Unsupervised learning

- What: Model finds structure without labels.

- Example: Customer segmentation, grouping shoppers into clusters by behavior to tailor marketing.

- When to use: Exploratory analysis and anomaly detection.
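
The customer-segmentation example can be sketched with a tiny k-means clustering routine. This is a simplified one-dimensional version with caller-supplied starting centroids; the spend figures are made up, and production systems would typically use a library implementation over many features.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Tiny k-means for 1-D data with caller-supplied starting centroids."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for value in values:
            # Assign each value to its nearest centroid.
            nearest = min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))
            clusters[nearest].append(value)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical monthly spend per shopper; note there are no labels.
monthly_spend = [12, 15, 14, 80, 85, 90]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[0.0, 100.0])
print(clusters)
```

No one told the algorithm which shoppers are "low spenders" or "high spenders"; the two groups emerge from the structure of the data.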

Reinforcement learning (RL)

- What: An agent learns by interacting with an environment and receiving rewards or penalties.

- Example: A logistics system that learns optimal routing by trial and error to minimize delivery time.

- When to use: Decision-making tasks with feedback loops, robotics, games, and dynamic pricing.
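
Trial-and-error learning can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement-learning setups (it has actions and rewards but no changing state). The route travel times, the function name, and the parameter values below are assumptions chosen for illustration.

```python
import random


def learn_best_route(travel_minutes, steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit: mostly pick the best-known route, sometimes explore."""
    rng = random.Random(seed)
    n_routes = len(travel_minutes)
    estimates = [0.0] * n_routes  # running average reward per route
    counts = [0] * n_routes
    for _ in range(steps):
        if rng.random() < epsilon:
            route = rng.randrange(n_routes)  # explore a random route
        else:
            route = max(range(n_routes), key=lambda r: estimates[r])  # exploit
        reward = -travel_minutes[route]  # shorter delivery time means higher reward
        counts[route] += 1
        estimates[route] += (reward - estimates[route]) / counts[route]
    return max(range(n_routes), key=lambda r: estimates[r])


# Hypothetical average delivery times (minutes) for three routes.
best_route = learn_best_route([30, 20, 25])
print(best_route)
```

The agent is never told which route is fastest. It tries routes, observes the reward, and gradually concentrates on the one that pays off.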

Self-supervised learning

- What: Models learn from unlabeled data by creating proxy tasks (e.g., predict missing parts).

- Example: A language model learns next-word prediction on huge corpora without explicit labels.

- Why it’s big: It scales learning to massive unlabeled datasets and is the backbone of modern LLMs.
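
The "proxy task" idea is easier to see in code. The sketch below turns raw, unlabeled text into (context, next word) training pairs; the function name and the two-word context window are illustrative choices, and real LLMs work on subword tokens with far longer contexts.

```python
def next_word_pairs(text, context_size=2):
    """Turn raw text into (context, next_word) training pairs without manual labels."""
    words = text.split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]


pairs = next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

The "labels" come for free from the text itself, which is why this approach scales to huge corpora.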

Few-shot / Zero-shot learning

- What: Models generalize to new tasks with very few or no examples.

- Example: Give a model two examples of a new email format (a few shots) and get it to generate similar emails.

- Why useful: Reduces the need for expensive labeled datasets.
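
In practice, few-shot and zero-shot use with an LLM often comes down to how the prompt is assembled. The helper below is a hypothetical sketch: the function name and the "Input:/Output:" layout are assumptions, not a format required by any particular model.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble a prompt from an instruction, worked examples, and a new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


# Two invented examples of the email format (the "shots").
examples = [
    ("Meeting moved to 3pm", "Subject: Schedule change | Body: The meeting is now at 3pm."),
    ("Invoice 12 is overdue", "Subject: Payment reminder | Body: Invoice 12 is past due."),
]
few_shot = build_few_shot_prompt("Rewrite each note as an email.", examples, "Office closed Friday")
zero_shot = build_few_shot_prompt("Rewrite each note as an email.", [], "Office closed Friday")
print(few_shot)
```

Passing an empty example list gives the zero-shot version: the model gets only the instruction and the new input.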

Deep Learning & Neural Architectures: The Engines

- Neural networks: Layers of computational units (neurons) that turn inputs into outputs. A network’s depth and width enable it to model complex functions.

- Convolutional Neural Networks (CNNs): Optimized for images. Typical uses: medical imaging (lesion detection), manufacturing (defect detection), and self-driving perception.

- Recurrent Neural Networks (RNNs) / LSTMs: Earlier favorites for sequential data (time series, speech). Largely supplanted by transformers for many language tasks.

- Transformers: The architecture that many language and vision models are built on today. They use attention, a mechanism that lets each element of the input weigh every other element, which makes it possible to learn long-range dependencies across text or image patches.

- Embeddings: Numeric representations that place similar items near each other in a vector space. Example: embedding “Paris” and “France” close together helps search and recommendations.
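
"Near each other in a vector space" is usually measured with cosine similarity. The three-dimensional vectors below are invented for illustration; real embeddings come from a trained model and typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means very similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, hand-written for this example.
embeddings = {
    "Paris": [0.9, 0.8, 0.1],
    "France": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

paris_france = cosine_similarity(embeddings["Paris"], embeddings["France"])
paris_banana = cosine_similarity(embeddings["Paris"], embeddings["banana"])
print(round(paris_france, 3), round(paris_banana, 3))
```

A search or recommendation system ranks items by this kind of score, so "Paris" would surface "France" long before "banana".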

Fine-tuning vs. training from scratch

- Fine-tuning: Starting from a pre-trained (foundation) model and tuning it on new data with fewer examples. Fast and cost-effective.

- From scratch: Training a model from random weights. This is costly and usually happens only when the dataset or domain is highly specialized.

Why this matters in practice: Companies typically start from pre-trained transformer-based models and adapt them to a particular task, for example by building a RAG chatbot over internal docs or by fine-tuning an image model on domain-specific medical scans.

Natural Language Processing (NLP): The Language Layer

- Tokenization: Splitting text into tokens, where a token is a unit of text (such as a word or subword). The choice of tokenization scheme also affects model size and behavior.

- Large Language Models (LLMs): Models trained on large corpora of text to generate or comprehend language; in general, capability grows with scale. Typical uses: drafting emails, responding to customer inquiries, and summarizing documents.

- Prompt engineering: Crafting the input to a generative model to get better output. Practical patterns: few-shot prompts (which include worked examples), role prompts (“You are a supportive copy editor”), and constrained prompts (which specify the output format).

- Retrieval-Augmented Generation (RAG): Combines a search step with generation: retrieve the relevant documents from a knowledge base, then generate answers grounded in those documents. This reduces hallucinations and increases fidelity for domain-specific systems.

- Hallucinations: When a model generates realistic-sounding but false information. A particular concern in healthcare, finance, and legal settings.

- Real-world example: A law firm builds a GDPR-compliance assistant using RAG. The assistant retrieves rules from the firm’s policy docs and cites the relevant passage snippets, which guards against confident but uncited hallucinations.
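
The retrieve-then-generate flow of RAG can be sketched without any model at all. In the toy version below, retrieval is plain word overlap rather than embedding search, the policy texts and document ids are invented, and the final call to an LLM is left out; only the grounded prompt is built.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace(".", " ").replace("?", " ").split())


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc_id: len(question_words & tokenize(documents[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question, documents, top_k=1):
    """Put the retrieved passages, tagged with their ids, in front of the question."""
    doc_ids = retrieve(question, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


# Invented firm policy snippets (not legal guidance).
documents = {
    "policy-1": "An erasure request is forwarded to the privacy team.",
    "policy-2": "Office visitors must sign in at the front desk.",
}
question = "Who handles an erasure request?"
prompt = build_grounded_prompt(question, documents)
print(prompt)
```

Because the source id travels with the passage, the generated answer can cite it, and a reviewer can check the claim against the original document.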

Computer Vision & Multimodal AI: The Visual And Combined Senses

- Computer Vision: Models that analyze images and videos. Common tasks: object detection, segmentation, and image classification.

- Multimodal models: Handle text plus images (and sometimes audio). Example: an app that takes a photo of a broken item and a voice description, then returns repair steps.

- Edge AI (on-device): Run vision models on phones or cameras for low latency and privacy (e.g., face-unlock, real-time safety alerts on factory floors).

Generative AI: Creativity At Scale

- Generative models: Create new content, text, images, music, video, or code.

- Text generation: LLMs produce natural text for summaries, customer replies, creative storytelling, and more. Used by marketers for copy and by developers for code snippets.

- Image generation: Diffusion or transformer-based models that generate images from a text prompt. Designers use them to prototype ad creatives and concept art.

- Music & video generation: Early but growing. Composers and film editors experiment with AI for scoring and rough-cut generation.

- Responsible use example: A marketing team uses a fast image-generation system to draft concepts, then has human designers create the brand-safe finals. This captures the speed while reducing the risk of copyright claims or style drift.

Data, Evaluation, And Deployment: Turning Models Into Products

- Training data quality: A model trained on biased data will produce biased outputs, and no amount of architectural sophistication can compensate for a lack of signal in the data.

- Validation & test sets: Used to estimate real-world performance. Cross-validation is useful when the data is small.

- Metrics: Choose the right one: accuracy, precision, recall, and F1 for classification; BLEU/ROUGE for text; mean average precision for detection. Above all, focus on the business metrics (revenue, retention, time saved) that matter in the end.

- A/B testing in production: Roll out model changes gradually and measure real user impact.

- Monitoring & model drift: Models degrade over time as data distributions shift. Production systems need logging, alerts, and retraining pipelines.

- Infrastructure notes: GPUs or cloud TPUs for training; scalable API endpoints for inference; vector databases for embedding search.
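
The classification metrics named above are simple to compute by hand, which helps when deciding which one fits a problem. The sketch below uses made-up labels and predictions for a binary task (1 = positive, 0 = negative); the function name is illustrative.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Invented ground truth and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision answers "when the model says positive, how often is it right?", while recall answers "of all real positives, how many did it catch?". Which one matters more depends on whether false alarms or misses are costlier.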

Ethics, Governance, And Safety: The Human Side

- Bias & fairness: Models can reproduce or amplify social biases from training data. Practical mitigation: collecting diverse datasets, fairness-aware training, and auditing.

- Explainability: Critical in regulated fields. Explainable AI (XAI) techniques such as feature importance and counterfactuals help users and auditors understand model behavior.

- Privacy & data protection: Risk is reduced by anonymization, differential privacy and data minimization policies. Many deployments have to comply with laws like GDPR and other regional regulations.

- Human-in-the-loop (HITL): Combining automated outputs with human review for high-stakes use cases. Example: radiologists confirm AI-flagged scans before a patient treatment decision.

- Operational security: Guard model endpoints, defend against prompt injection and data leakage, and specify who can fine-tune or deploy models.
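
One way to wire a human-in-the-loop check into a pipeline is a simple routing rule. The sketch below is an assumption rather than a prescribed design: the function name, the "abnormal"/"normal" labels, and the 0.9 confidence threshold are all hypothetical.

```python
def needs_human_review(prediction, confidence, threshold=0.9):
    """Decide whether a model output must be checked by a person.

    Flagged ("abnormal") findings always go to a human, and so does
    anything the model is not confident about.
    """
    return prediction == "abnormal" or confidence < threshold


# Hypothetical model outputs: (scan id, predicted label, confidence)
outputs = [
    ("scan-001", "abnormal", 0.99),
    ("scan-002", "normal", 0.95),
    ("scan-003", "normal", 0.60),
]
review_queue = [scan_id for scan_id, label, conf in outputs if needs_human_review(label, conf)]
print(review_queue)
```

The point of the rule is that automation handles the clear-cut cases while people stay responsible for the decisions that carry real consequences.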

Practical Uses: How to Use This Glossary While Building or Buying AI

- Start from the problem, not the model. Ask: what business outcome do we need? Then map it to the most suitable method (classification, detection, generation).

- Prototype fast with pre-trained models. Use a small RAG or fine-tuning approach before investing in full training.

- Measure real user outcomes. Click-through or accuracy numbers are helpful, but revenue, time saved, and error reduction matter more.

- Design guardrails early. Consider privacy, explainability, and an escalation path for mistakes.

- Plan for maintenance. Models require retraining and monitoring, so budget for model ops.

Compact Cheat-Sheet: One-Line Definitions (Easy Learning)

| Letter | AI Term | Explanation |
|---|---|---|
| A | Agentic AI | Systems that act autonomously to complete user goals. |
| B | Backpropagation | Algorithm for updating neural network weights during training. |
| C | Confidence score | Model’s internal estimate of how sure it is about an answer. |
| D | Diffusion model | Image generator that denoises random noise into a picture. |
| E | Embedding | Numeric vector representing a piece of data (word, image). |
| F | Fine-tuning | Adapting a pre-trained model to a specific task. |
| G | GAN (Generative Adversarial Network) | Generator + discriminator duel to produce realistic samples. |
| H | Hallucination | Model assertively producing incorrect facts. |
| I | Inference | Running a model to get predictions. |
| J | Jupyter Notebook | Interactive environment popular for data science. |
| K | Knowledge graph | Structured representation of facts and relationships. |
| L | Latent space | Abstract vector space where embeddings live. |
| M | Multimodal | Handling multiple data types (text + image). |
| N | Natural Language Understanding (NLU) | Model’s ability to grasp meaning. |
| O | Overfitting | Model learns noise in training data and fails on new data. |
| P | Prompt | Input instructions to a generative model. |
| Q | Quantization | Reducing the numeric precision of a model to shrink its size and inference cost. |
| R | RAG (Retrieval-Augmented Generation) | Combine search with generation for grounded output. |
| S | Supervised learning | Trained on labeled examples. |
| T | Transformer | Neural network architecture using attention. |
| U | Unsupervised learning | Finds patterns without labels. |
| V | Vector DB | Stores embeddings for fast similarity search. |
| W | Weights | Numeric parameters learned during training. |
| X | XAI (Explainable AI) | Methods to explain model decisions. |
| Y | YOLO | Fast real-time object detector. |
| Z | Zero-shot learning | Model handles tasks it hasn’t been explicitly trained on. |

FAQs

What is AI?

Artificial Intelligence (AI) refers to computer systems that perform tasks typically requiring human intelligence, such as decision-making, problem-solving, and learning from data.

How does AI help businesses?

AI handles repetitive tasks, analyzes large volumes of data quickly, and makes informed suggestions, so companies can work faster with fewer errors.

Can AI improve customer service?

Yes. AI chatbots and virtual assistants can answer customer questions in real time, providing 24/7 support and handling multiple queries simultaneously.

Is AI expensive to adopt?

Not always. Today there are numerous affordable AI tools, and small businesses can begin with something simple like chatbots, email automation, or AI-assisted analytics without making a significant investment.

Where is AI used today?

Companies use AI in product recommendations on shopping sites, fraud detection in banks, self-driving car technology, healthcare diagnosis tools, and marketing campaign personalization.

Conclusion: How to Use This Knowledge

This Ultimate AI Glossary is meant to be a practical reference. Bookmark it, keep the cheat sheet handy when you are reading industry articles, and come back whenever you need to map out a project or evaluate your tool stack.

The words matter because they give us precision in our technical and business decision-making.

If you are building AI, concentrate on data quality, model evaluation, and production monitoring. If you are buying AI, require explainability, privacy guarantees, and measurable business metrics.

Technology will keep advancing quickly, but the best way to secure value will always be to keep its use thoughtful and human-centered.